Thinking Inductively

It's hard to argue with the massive scalability one can achieve with functional programming. I've done just enough in Erlang, Scala, and Lisp/Scheme to appreciate the benefits. Much of that "freedom to run" comes with stateless flows and stackless recursion. But more than a change of language, it really requires a change of thinking.

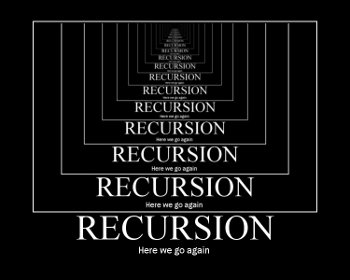

The classic examples in functional programming languages often employ inductive techniques, since that's where they shine and demonstrate elegance. If you want to compute an exponent, prime, or Fibonacci series; stack a Hanoi tower; or even walk a tree (like an XML DOM), you can usually do it in a few lines of clever inductive code. And with a functional language, poor recursive performance and stack overflows are typically not a problem (Scala being one exception). If you're not in a functional language and want to use the same algorithms without these risks, you're left with the exercise of converting tail recursion to iteration. That's much like watching movies on your phone: it's not hard, just much less interesting.

That's why I dabble periodically in functional languages: to try to keep my waning inductive brain cells alive. It sometimes pays off at work, too: I used induction just yesterday to simplify (and recurse) a clumsy and confusing method. Inductive techniques are not the golden hammer that some would believe, but they do have their "best fit" use cases.

Given our current software crisis of how to go truly parallel (and keep all those cores we're now getting busy), some would argue that stateless functional programming is where we should start. Stateless is fine for tasks that can live in their own sandboxes and play with their own toys, but most solutions have to share with others. We need a unified blend of stateless operation, classic resource control, and higher-level concurrency semantics (no threads or synchronized keywords please, that's the assembly language of the 21st century). More on this later.